Association of Patient Volume With Online Ratings of California Urologists

Background: Online reviews are an increasingly popular tool for patients to evaluate and choose physicians. Although the accuracy, utility, and meaning of online reviews are debated by physicians, the patient perspective is a valued component of the physician-patient relationship and is likely to increase in importance. Reliable online reviews provide guidance for health care consumers as well as feedback to physicians. Online reviews are influenced by many factors, including patient wait times; however, little else is known about physician practice patterns and their effect on reviews. We evaluated Medicare billing data and online reviews of urologists in California, with the hypothesis that urologists with higher-volume practices would have lower patient ratings, potentially owing to shorter physician-patient interactions and increased wait times.

Methods: We retrospectively reviewed Medicare data from January 1 to December 31, 2014, on the 665 urologists in California. We obtained data on Medicare patients from propublica.com, which tracked physician billing and reimbursement, including the number of patients seen and the number of services billed. We also recorded the sex and practice setting (academic vs private practice) of each urologist. Practice settings were considered academic if they were associated with a residency training program. The number of reviews and mean score (range, 1-5, where 1 indicates the poorest rating and 5 indicates the best rating) were then obtained from 4 websites (Ratemd.com, Healthgrades.com, Vitals.com, and Yelp.com). We compared urologists’ weighted ratings, stratified by number of patients seen, Medicare services billed, sex, and practice setting. Data analysis was performed from January 1 to June 30, 2017. Univariable and multivariable linear regression were performed. Confounding variables were chosen a priori for each analysis and included in the multivariable model. A Wilcoxon-type test was used to test for trend. All tests were 2-sided, and P < .05 was considered significant. The study was approved by the University of California–San Francisco institutional review board, which granted a waiver of informed consent because the data accessed were public.
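The weighting scheme behind the "weighted ratings" is not spelled out in the text. One plausible reading, shown here only as a minimal sketch, is a mean across the 4 websites weighted by each site's number of reviews. The function name `weighted_mean_rating` and the example values are hypothetical and are not taken from the study data.

```python
from typing import Optional


def weighted_mean_rating(site_ratings: dict[str, tuple[float, int]]) -> Optional[float]:
    """Combine per-site mean ratings into one score, weighting by review count.

    site_ratings maps a website name to (mean rating on the 1-5 scale,
    number of reviews on that site). Returns None when the physician has
    no reviews on any site.

    Assumption: weights are the per-site review counts; the source does not
    state the weighting scheme.
    """
    total_reviews = sum(n for _, n in site_ratings.values())
    if total_reviews == 0:
        return None
    weighted_sum = sum(mean * n for mean, n in site_ratings.values())
    return weighted_sum / total_reviews


# Hypothetical urologist; values invented for illustration only.
example = {
    "Healthgrades.com": (4.0, 6),
    "Vitals.com": (3.5, 4),
    "Yelp.com": (5.0, 2),
    "Ratemd.com": (0.0, 0),  # no reviews on this site, so it carries no weight
}
combined = weighted_mean_rating(example)
print(combined)
```

Under this scheme a site with many reviews pulls the combined score toward its own mean, and a site with no reviews is ignored.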

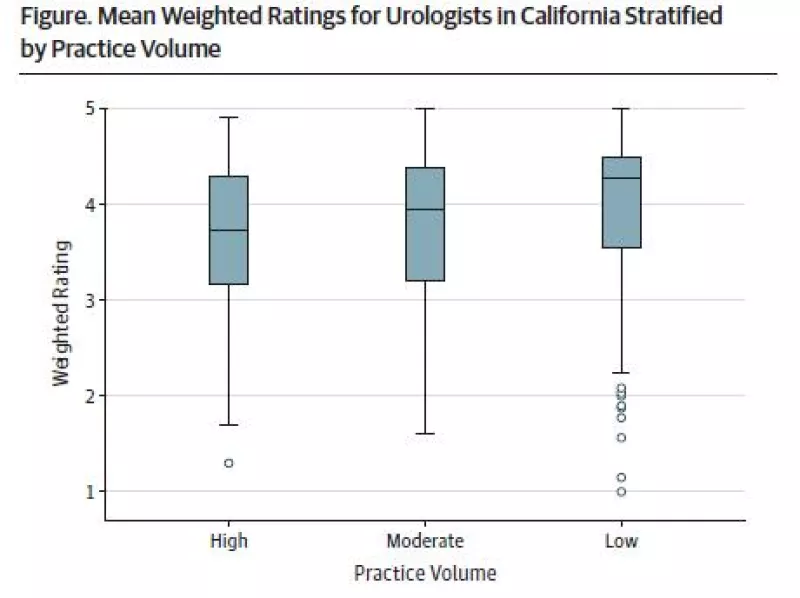

Results: Of the 665 urologists in California with Medicare patients in 2014, the mean total number of reviews per urologist across the 4 websites combined was 10, and 651 urologists had at least 1 rating. Ratemd.com had 325 reviews, Healthgrades.com had 600 reviews, Vitals.com had 604 reviews, and Yelp.com had 236 reviews. Among the study sample, there were 600 male urologists and 581 urologists who worked in a nonacademic setting. Mean weighted ratings for academic urologists were 4.2 (95% CI, 4.0-4.3), compared with 3.7 (95% CI, 3.6-3.8) for their nonacademic peers (P < .001). The Figure shows the difference in ratings between academic and private practice urologists, broken down into tertiles by number of patients seen. Female and male urologists had similar mean weighted ratings (3.9 [95% CI, 3.7-4.2] vs 3.8 [95% CI, 3.7-3.8]; P = .10).
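The tertile grouping by number of patients seen can be illustrated with a short sketch. How the cut points were computed is not stated in the text; the version below assumes the default method of Python's `statistics.quantiles`, and both the function name `assign_tertiles` and the patient counts are hypothetical.

```python
import statistics


def assign_tertiles(patient_counts: list[float]) -> list[int]:
    """Label each physician 1 (lowest volume), 2, or 3 (highest volume).

    Assumption: cut points come from statistics.quantiles with n=3, which
    returns the two boundaries that split the data into three groups.
    """
    low_cut, high_cut = statistics.quantiles(patient_counts, n=3)
    labels = []
    for count in patient_counts:
        if count <= low_cut:
            labels.append(1)
        elif count <= high_cut:
            labels.append(2)
        else:
            labels.append(3)
    return labels


# Hypothetical Medicare patient counts; invented for illustration only.
counts = [100, 200, 300, 400, 500, 600, 700, 800, 900]
tertiles = assign_tertiles(counts)
print(tertiles)
```

Mean ratings can then be compared within each tertile, separately for academic and private practice urologists.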

The median number of Medicare patients seen per physician in 2014 was 426 (interquartile range, 241-693), and the median number of total services billed was 2293 (interquartile range, 845-5139). A Wilcoxon-type test for trend showed significantly higher ratings among urologists who saw fewer Medicare patients.

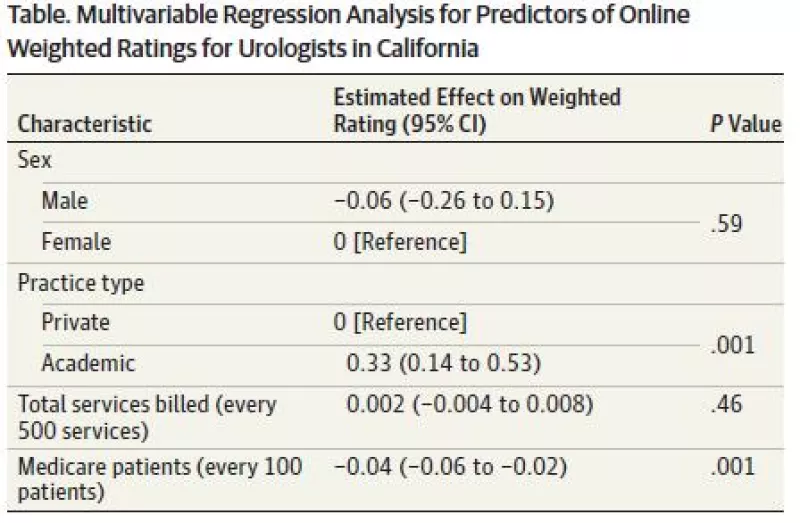

The multivariable analysis of ratings controlled for sex, practice setting, and total services billed (Table). Academic practice setting was associated with higher scores, while a higher Medicare patient load was associated with poorer ratings. For every additional 100 patients seen, ratings were 0.04 points lower (P = .001). A greater number of services billed was associated with lower ratings in univariable analysis, but this association was not significant in our multivariable model.
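To put the coefficient in context, the sketch below applies it to the interquartile range of Medicare patients seen (241 to 693). This is a back-of-the-envelope illustration only: it assumes the linear association holds uniformly across that range with the other covariates held fixed, and the function name `expected_rating_change` is hypothetical.

```python
def expected_rating_change(extra_patients: float, slope_per_100: float = -0.04) -> float:
    """Expected change in rating for a given difference in patients seen.

    slope_per_100 is the reported change in rating per 100 additional
    Medicare patients. Assumption: the association is linear over the range.
    """
    return slope_per_100 * extra_patients / 100.0


# Interquartile range of Medicare patients seen per physician, as reported.
q1_patients, q3_patients = 241, 693
delta = expected_rating_change(q3_patients - q1_patients)
print(round(delta, 2))
```

Under these assumptions, moving from the 25th to the 75th percentile of patient volume corresponds to a rating roughly 0.18 points lower on the 5-point scale.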

Limitations of this study include the use of propublica.com Medicare data, which may not accurately represent a physician’s non-Medicare patient population.

Discussion: Online patient ratings for urologists in California were lower for those with higher-volume practices. Research in other specialties suggests that physicians with busier practices have longer wait times and spend less time with patients, which are major drivers of ratings.4 Univariable analysis also suggested that ratings were poorer for physicians who billed for more services. For urologists, many of these services are invasive procedures, which may contribute to lower ratings.

Ratings for female urologists did not differ from those of their male counterparts, although other fields have shown higher ratings for women.5 The large difference between ratings for academic and nonacademic urologists was surprising. The perception of seeing an expert in a particular subspecialty may appeal to patients and drive higher ratings. More research is needed to determine which factors lead to more satisfied patients.